Set up Solaris 9/10/11 x86/64 and Solaris 10 x86 on QEMU:

--------------------------------------------------------

Background: I got the "long" qemu-system-x86_64 VM-creation command by creating the VM with virt-install and filtering the output of "ps -ef | grep qemu-system". When I tried to set up a Solaris 10 VM with a basic QEMU command, the installation got stuck in a reboot loop as soon as it loaded the kernel from the installation ISO.

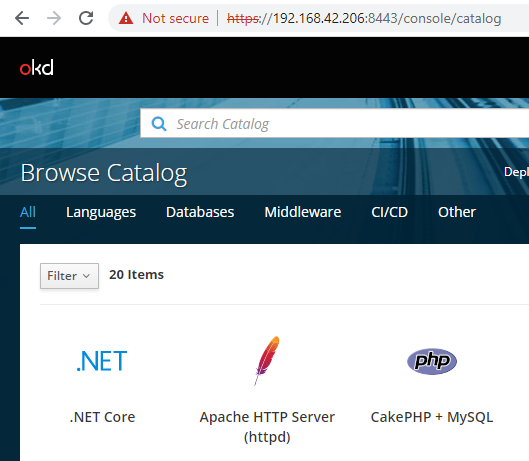

Sample virt-install command: virt-install --name s10x64 --memory 4096 --vcpus 2 --disk /s10x64/s10x64.qcow2 --cdrom /s10x64/sol-10-u1-ga-x86-dvd-iso.iso --os-variant solaris10 --graphics vnc
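libvirt starts the QEMU process for a virt-install VM with a "-name guest=<name>,..." option (visible in the commands below), so the generated command line can also be read directly from /proc instead of filtering ps output. A minimal sketch, assuming a Linux host with pgrep from procps; print_cmdline and qemu_cmdline_for are hypothetical helper names:

```shell
# Print each argument of a process on its own line.
# /proc/<pid>/cmdline stores the arguments separated by NUL bytes.
print_cmdline() {
    tr '\0' '\n' < "/proc/$1/cmdline"
}

# Find the QEMU process libvirt started for a guest (matched on the
# "-name guest=<name>,..." option) and print its arguments.
qemu_cmdline_for() {
    local pid
    pid=$(pgrep -f "qemu-system.*guest=$1([, ]|$)" | head -n 1)
    if [ -z "$pid" ]; then
        echo "no running QEMU process found for guest '$1'" >&2
        return 1
    fi
    print_cmdline "$pid"
}
```

For example, `qemu_cmdline_for s10x64 | paste -sd ' '` prints the whole command on one line, similar to the ps output.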

Set up the Ubuntu QEMU hypervisor host

-------------------------------------

- NOTE: these packages install QEMU 6.2.0 in /usr/sbin/, which I don't use; QEMU 7.2.0 is built from source further down.

root@itamint:~/# apt update -y

root@itamint:~/# apt -y install bridge-utils cpu-checker libvirt-clients virtinst libvirt-daemon libvirt-daemon-system qemu qemu-kvm

root@itamint:~/# usermod -aG kvm root

root@itamint:~/# newgrp kvm

root@itamint:~/# systemctl enable libvirtd

root@itamint:~/# systemctl start libvirtd

- Set up VDE for networking:

root@itamint:~/# apt install vde2

root@itamint:~/# apt-get install libvdeplug-dev

- Compile and install QEMU 7.2.0 (the latest release at the time of writing) with VDE enabled, into /usr/local/bin:

root@itamint:~/# apt install -y gcc make ninja-build libglib2.0-dev libpixman-1-dev ncurses-dev

root@itamint:~/# apt install -y libusb-dev libusb-1.0-0-dev libusbredirparser1 libusbredirhost1 usbutils

root@itamint:~/# wget https://download.qemu.org/qemu-7.2.0.tar.xz

root@itamint:~/# tar xf qemu-7.2.0.tar.xz

root@itamint:~/# cd qemu-7.2.0

root@itamint:~/qemu-7.2.0# ./configure --enable-vde --enable-libusb

root@itamint:~/qemu-7.2.0# make

root@itamint:~/qemu-7.2.0# make install
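The configure step above builds every QEMU target, which takes a long time. Only the x86_64 system emulator is used in this guide, so configure's --target-list=x86_64-softmmu option can shorten the build. A minimal sketch of that, plus a check that VDE support made it into the binary; qemu_has_vde is a hypothetical helper, and it assumes this QEMU version lists its netdev backends with "-netdev help":

```shell
# Optional faster build (only the x86_64 system emulator is used in this guide):
#   ./configure --target-list=x86_64-softmmu --enable-vde --enable-libusb
#   make -j"$(nproc)"
#   make install

# Succeed if the given QEMU binary lists "vde" among its -netdev backends.
qemu_has_vde() {
    local qemu=${1:-/usr/local/bin/qemu-system-x86_64}
    "$qemu" -netdev help 2>/dev/null | grep -qw vde
}
```

For example, `qemu_has_vde && echo "VDE enabled"`.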

---------- End of QEMU host setup -----------------------------

- Set up a VDE network switch that the VMs connect to:

- Create a VDE switch with a host-side tap interface (vde0) and route the VM subnet (10.0.2.0/24) through it so the host can act as the VMs' gateway (note that this configuration is lost when the host reboots):

root@itamint:~/# vde_switch -tap vde0 -daemon

root@itamint:~/# ip link set dev vde0 up

root@itamint:~/# ip route add 10.0.2.0/24 dev vde0

root@itamint:~/# echo 1 > /proc/sys/net/ipv4/conf/vde0/proxy_arp

- Give the VMs Internet access (run on the QEMU hypervisor host; wlp5s0 is the host's WAN NIC):

root@itamint:~/# echo 1 > /proc/sys/net/ipv4/ip_forward

root@itamint:~/# iptables -t nat -A POSTROUTING -o wlp5s0 -j MASQUERADE

root@itamint:~/# iptables -I FORWARD 1 -i vde0 -j ACCEPT

root@itamint:~/# iptables -I FORWARD 1 -o vde0 -m state --state RELATED,ESTABLISHED -j ACCEPT
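Because the switch, route and NAT rules above are lost when the host reboots, it can help to keep them in one function that is re-run after each boot. A minimal sketch that issues the same commands; setup_vde_network and the DRY_RUN switch are hypothetical names, and sysctl -w is used as an equivalent of the "echo 1 > /proc/sys/..." writes:

```shell
# Re-create the VDE switch, routing and NAT rules (they do not survive a reboot).
# Usage: setup_vde_network <wan-if> [tap-if] [vm-subnet]
# Set DRY_RUN=1 to print the commands instead of running them (as root).
setup_vde_network() {
    if [ -z "${1:-}" ]; then
        echo "usage: setup_vde_network <wan-if> [tap-if] [vm-subnet]" >&2
        return 1
    fi
    local wan=$1 tap=${2:-vde0} subnet=${3:-10.0.2.0/24} run=""
    if [ "${DRY_RUN:-0}" = 1 ]; then run=echo; fi

    # VDE switch with a host-side tap interface; route the VM subnet through it.
    $run vde_switch -tap "$tap" -daemon
    $run ip link set dev "$tap" up
    $run ip route add "$subnet" dev "$tap"
    # Same effect as writing 1 into the matching /proc/sys files.
    $run sysctl -w "net.ipv4.conf.$tap.proxy_arp=1"
    $run sysctl -w net.ipv4.ip_forward=1

    # Masquerade VM traffic leaving the WAN interface and allow forwarding.
    $run iptables -t nat -A POSTROUTING -o "$wan" -j MASQUERADE
    $run iptables -I FORWARD 1 -i "$tap" -j ACCEPT
    $run iptables -I FORWARD 1 -o "$tap" -m state --state RELATED,ESTABLISHED -j ACCEPT
}
```

For example, `setup_vde_network wlp5s0` after a host reboot. Running it twice without a reboot adds duplicate iptables rules, so `DRY_RUN=1 setup_vde_network wlp5s0` is a safe way to review the commands first.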

---------- End of VDE network switch setup --------------------

- Location of the PC BIOS directory, passed to QEMU with "-L /path/to/dir". Both paths hold the same file: the first was installed by the QEMU 7.2.0 build, the second by Ubuntu's seabios package.

root@itamint:~# find /usr -iname bios-256k.bin

/usr/local/share/qemu/bios-256k.bin

/usr/share/seabios/bios-256k.bin

- Create a Solaris 9 VM and install the O/S from the ISO:

---------------------------------------------------------

root@itamint:/s9x86# qemu-img create -f qcow2 s9x86.qcow2 20G

root@itamint:/s9x86# /usr/local/bin/qemu-system-x86_64 -L /usr/local/share/qemu/ -name guest=s9x86,debug-threads=on -machine pc,usb=off -accel kvm -cpu host,migratable=on -m 4096 -smp 2,sockets=2,cores=1,threads=1 -nodefaults -global kvm-pit.lost_tick_policy=delay -no-hpet -no-shutdown -global PIIX4_PM.disable_s3=1 -global PIIX4_PM.disable_s4=1 -boot strict=on -device ich9-usb-ehci1,id=usb,bus=pci.0,addr=0x4.0x7 -device ich9-usb-uhci1,masterbus=usb.0,firstport=0,bus=pci.0,multifunction=on,addr=0x4 -device ich9-usb-uhci2,masterbus=usb.0,firstport=2,bus=pci.0,addr=0x4.0x1 -device ich9-usb-uhci3,masterbus=usb.0,firstport=4,bus=pci.0,addr=0x4.0x2 -device VGA,id=video0,vgamem_mb=32,bus=pci.0,addr=0x2 -hda /s9x86/s9x86.qcow2 -boot d --cdrom /s9x86/sol-9-u8-ga-x86-dvd.iso -device i82801,netdev=net0,mac=52:54:00:e6:5d:16,bus=pci.0,addr=0x3 -netdev vde,id=net0

- NOTE: the e1000 NIC did not work with any of the bridge, tap or VDE backends: the ARP table on the QEMU host showed "incomplete" for the VM's MAC address, and no communication was possible. With the i82801 NIC model it works properly. Solaris 9 detects it as "PCI: Intel i815/82559 Ethernet" with interface name iprb0. This is a 10/100 Mbps NIC.

- Connect to the installation with a VNC client such as MobaXterm, RealVNC or TigerVNC to complete the installation process.

- NOTE: if you choose the auto-reboot option, the VM boots from the CD again at the end of the install. Interrupt the QEMU command (Ctrl+C), then launch the VM from the OS boot disk, e.g.:

root@itamint:/s9x86# /usr/local/bin/qemu-system-x86_64 -L /usr/local/share/qemu/ -name guest=s9x86,debug-threads=on -machine pc,usb=off -accel kvm -cpu host,migratable=on -m 4096 -smp 2,sockets=2,cores=1,threads=1 -nodefaults -global kvm-pit.lost_tick_policy=delay -no-hpet -no-shutdown -global PIIX4_PM.disable_s3=1 -global PIIX4_PM.disable_s4=1 -boot strict=on -device ich9-usb-ehci1,id=usb,bus=pci.0,addr=0x4.0x7 -device ich9-usb-uhci1,masterbus=usb.0,firstport=0,bus=pci.0,multifunction=on,addr=0x4 -device ich9-usb-uhci2,masterbus=usb.0,firstport=2,bus=pci.0,addr=0x4.0x1 -device ich9-usb-uhci3,masterbus=usb.0,firstport=4,bus=pci.0,addr=0x4.0x2 -device VGA,id=video0,vgamem_mb=32,bus=pci.0,addr=0x2 -hda /s9x86/s9x86.qcow2 -device i82801,netdev=net0,mac=2a:e8:ec:b2:1f:7b,bus=pci.0,addr=0x3 -netdev vde,id=net0
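The install and boot commands for each release share every option except the install media (-boot d and --cdrom), the NIC model and the MAC address. A minimal sketch of a wrapper that keeps the shared options in one bash array; run_solaris_vm and DRY_RUN are hypothetical names, and the options are copied from the commands above (using -cdrom, the single-dash spelling of --cdrom):

```shell
# Launch a Solaris VM with the options used throughout this guide.
# Usage: run_solaris_vm <name> <disk> <nic-model> <mac> [install-iso]
# Set DRY_RUN=1 to print the command instead of running it.
run_solaris_vm() {
    if [ "$#" -lt 4 ]; then
        echo "usage: run_solaris_vm <name> <disk> <nic-model> <mac> [install-iso]" >&2
        return 1
    fi
    local name=$1 disk=$2 nic=$3 mac=$4 iso=${5:-}
    local qemu=/usr/local/bin/qemu-system-x86_64
    local -a args=(
        -L /usr/local/share/qemu/
        -name "guest=$name,debug-threads=on"
        -machine pc,usb=off -accel kvm -cpu host,migratable=on
        -m 4096 -smp 2,sockets=2,cores=1,threads=1
        -nodefaults -global kvm-pit.lost_tick_policy=delay -no-hpet -no-shutdown
        -global PIIX4_PM.disable_s3=1 -global PIIX4_PM.disable_s4=1
        -boot strict=on
        -device ich9-usb-ehci1,id=usb,bus=pci.0,addr=0x4.0x7
        -device ich9-usb-uhci1,masterbus=usb.0,firstport=0,bus=pci.0,multifunction=on,addr=0x4
        -device ich9-usb-uhci2,masterbus=usb.0,firstport=2,bus=pci.0,addr=0x4.0x1
        -device ich9-usb-uhci3,masterbus=usb.0,firstport=4,bus=pci.0,addr=0x4.0x2
        -device VGA,id=video0,vgamem_mb=32,bus=pci.0,addr=0x2
        -hda "$disk"
    )
    # Boot from the installation DVD only when an ISO is given.
    if [ -n "$iso" ]; then
        args+=(-boot d -cdrom "$iso")
    fi
    # NIC model (e.g. i82801 or e1000) attached to the VDE switch.
    args+=(-device "$nic,netdev=net0,mac=$mac,bus=pci.0,addr=0x3" -netdev vde,id=net0)

    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$qemu ${args[*]}"
    else
        "$qemu" "${args[@]}"
    fi
}
```

For example, `run_solaris_vm s9x86 /s9x86/s9x86.qcow2 i82801 52:54:00:e6:5d:16 /s9x86/sol-9-u8-ga-x86-dvd.iso` starts the Solaris 9 install, and the same call without the ISO argument boots from the disk.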

- On the first boot after the install, if you use the MobaXterm VNC client, switch it to "Full Screen"; otherwise the desktop/login won't display properly. After you log in, you can skip the installation of the "Java Enterprise System", after which the system reboots again. When you log in again, you are prompted to choose either the CDE or the GNOME 2.0 desktop.

- Optionally disable CDE (GUI):

bash-2.05# /usr/dt/bin/dtconfig -d

bash-2.05# mv /etc/rc2.d/S99dtlogin /etc/rc2.d/DISABLE_s99dtlogin

- Edit /etc/ssh/sshd_config in the VM and change "PermitRootLogin no" to "PermitRootLogin yes" (do not do this in a production environment).

- Make sshd re-read its configuration by sending it a HUP signal: "kill -HUP <sshd-pid>"
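The sshd_config change can be scripted. Solaris 9's sed has no -i (in-place) option, so this minimal sketch writes the edited text to a temporary file and copies it back, keeping a .orig backup; enable_root_ssh is a hypothetical name, and the pgrep line in the comment assumes the listening sshd is the one whose parent is init (PID 1):

```shell
# Enable root logins over SSH in a lab VM (not for production).
# Edit a copy, then write it back over the original so the file keeps its
# owner and permissions; the untouched original is kept as <file>.orig.
enable_root_ssh() {
    local cfg=${1:-/etc/ssh/sshd_config}
    cp -p "$cfg" "$cfg.orig" || return 1
    sed 's/^PermitRootLogin  *no *$/PermitRootLogin yes/' "$cfg.orig" > "$cfg.new" &&
        cat "$cfg.new" > "$cfg" &&
        rm -f "$cfg.new"
}

# Then make the running sshd re-read sshd_config (Solaris 9), e.g.:
#   kill -HUP `pgrep -x -P 1 sshd`
```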

- SSH to the VM from the Ubuntu QEMU host (10.0.2.150 is what I assigned to the VM during setup):

root@itamint:~# ssh -oHostKeyAlgorithms=+ssh-rsa -oKexAlgorithms=+diffie-hellman-group1-sha1 -c 3des-cbc root@10.0.2.150
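Instead of repeating the legacy-algorithm options on every ssh command, they can be stored in a Host entry in the OpenSSH client configuration. A minimal sketch, assuming OpenSSH on the Ubuntu host; add_legacy_ssh_host is a hypothetical helper, and the + prefix appends the legacy algorithms to OpenSSH's defaults instead of replacing them. The key-exchange algorithm is a parameter because the Solaris 10/11 commands in this guide use diffie-hellman-group-exchange-sha1 instead:

```shell
# Append a Host entry so that "ssh <name>" enables the legacy algorithms.
# Usage: add_legacy_ssh_host <name> <ip> [user] [config-file] [kex-algorithm]
add_legacy_ssh_host() {
    local name=${1:-} ip=${2:-} user=${3:-root}
    local cfg=${4:-$HOME/.ssh/config} kex=${5:-diffie-hellman-group1-sha1}
    if [ -z "$name" ] || [ -z "$ip" ]; then
        echo "usage: add_legacy_ssh_host <name> <ip> [user] [config-file] [kex-algorithm]" >&2
        return 1
    fi
    mkdir -p "$(dirname "$cfg")"
    cat >> "$cfg" <<EOF

Host $name
    HostName $ip
    User $user
    HostKeyAlgorithms +ssh-rsa
    KexAlgorithms +$kex
    Ciphers +3des-cbc
EOF
}
```

For example, `add_legacy_ssh_host s9x86 10.0.2.150` followed by `ssh s9x86`.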

- NOTE: once the VM has network access, you can add the "--daemonize" option to the boot command so QEMU detaches from the shell session on the QEMU host.

------------- End of Solaris 9 setup --------------------------------

- Create a Solaris 10 VM and install the O/S from the ISO:

----------------------------------------------------------

root@itamint:/s9x86# qemu-img create -f qcow2 /s10x64/s10x64.qcow2 20G

root@itamint:/s9x86# /usr/local/bin/qemu-system-x86_64 -L /usr/local/share/qemu/ -name guest=s10x64,debug-threads=on -machine pc,usb=off -accel kvm -cpu host,migratable=on -m 4096 -smp 2,sockets=2,cores=1,threads=1 -nodefaults -global kvm-pit.lost_tick_policy=delay -no-hpet -no-shutdown -global PIIX4_PM.disable_s3=1 -global PIIX4_PM.disable_s4=1 -boot strict=on -device ich9-usb-ehci1,id=usb,bus=pci.0,addr=0x4.0x7 -device ich9-usb-uhci1,masterbus=usb.0,firstport=0,bus=pci.0,multifunction=on,addr=0x4 -device ich9-usb-uhci2,masterbus=usb.0,firstport=2,bus=pci.0,addr=0x4.0x1 -device ich9-usb-uhci3,masterbus=usb.0,firstport=4,bus=pci.0,addr=0x4.0x2 -device VGA,id=video0,vgamem_mb=32,bus=pci.0,addr=0x2 -hda /s10x64/s10x64.qcow2 -boot d --cdrom /root/s10x86/sol-10-u8-ga-x86-dvd.iso -device e1000,netdev=net0,mac=52:54:00:e6:4d:15,bus=pci.0,addr=0x3 -netdev vde,id=net0
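Each VM on the VDE switch needs its own MAC address. The install commands here use addresses starting with 52:54:00, the prefix QEMU and libvirt use for guest NICs. A minimal sketch for generating a random one; random_qemu_mac is a hypothetical helper that relies on bash's $RANDOM:

```shell
# Print a random MAC address in the 52:54:00:xx:xx:xx range.
random_qemu_mac() {
    local b1 b2 b3
    b1=$(( RANDOM % 256 ))
    b2=$(( RANDOM % 256 ))
    b3=$(( RANDOM % 256 ))
    printf '52:54:00:%02x:%02x:%02x\n' "$b1" "$b2" "$b3"
}
```

Generate the address once per VM and reuse it in both the install and the boot command, so the guest keeps the same NIC identity.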

- Connect to the installation with a VNC client such as MobaXterm, RealVNC or TigerVNC to complete the installation process.

- NOTE: if you choose the auto-reboot option, the VM boots from the CD again at the end of the install. Interrupt the QEMU command (Ctrl+C), then launch the VM from the OS boot disk, e.g.:

root@itamint:~/s10x86# /usr/local/bin/qemu-system-x86_64 -L /usr/local/share/qemu/ -name guest=s10x64,debug-threads=on -machine pc,usb=off -accel kvm -cpu host,migratable=on -m 4096 -smp 2,sockets=2,cores=1,threads=1 -nodefaults -global kvm-pit.lost_tick_policy=delay -no-hpet -no-shutdown -global PIIX4_PM.disable_s3=1 -global PIIX4_PM.disable_s4=1 -boot strict=on -device ich9-usb-ehci1,id=usb,bus=pci.0,addr=0x4.0x7 -device ich9-usb-uhci1,masterbus=usb.0,firstport=0,bus=pci.0,multifunction=on,addr=0x4 -device ich9-usb-uhci2,masterbus=usb.0,firstport=2,bus=pci.0,addr=0x4.0x1 -device ich9-usb-uhci3,masterbus=usb.0,firstport=4,bus=pci.0,addr=0x4.0x2 -device VGA,id=video0,vgamem_mb=32,bus=pci.0,addr=0x2 -hda /s10x64/s10x64.qcow2 -device e1000,netdev=net0,mac=52:54:00:e6:4d:15,bus=pci.0,addr=0x3 -netdev vde,id=net0

- On the first boot after the install, if you use the MobaXterm VNC client, switch it to "Full Screen"; otherwise the desktop/login won't display properly.

- Optionally disable CDE (GUI):

bash-3.00# /usr/dt/bin/dtconfig -d

bash-3.00# svcadm disable cde-login


- Edit /etc/ssh/sshd_config in the VM and change "PermitRootLogin no" to "PermitRootLogin yes" (do not do this in a production environment).

- Restart sshd with the command "svcadm restart ssh" (the SMF service is svc:/network/ssh:default).

- SSH to the VM from the Ubuntu QEMU host (10.0.2.105 is what I assigned to the VM during setup):

ssh -oKexAlgorithms=+diffie-hellman-group-exchange-sha1 -o HostKeyAlgorithms=ssh-rsa root@10.0.2.105

- NOTE: once the VM has network access, you can add the "--daemonize" option to the boot command so QEMU detaches from the shell session on the QEMU host.

------------- End of Solaris 10 setup -------------------------------

- Create a Solaris 11 VM and install the O/S from the ISO:

----------------------------------------------------------

root@itamint:~/s10x86# mkdir /s11x64

root@itamint:~/s10x86# qemu-img create -f qcow2 /s11x64/s11x64.qcow2 8G

root@itamint:~/s10x86# cd /s11x64/

root@itamint:/s11x64# ls

s11x64.qcow2 sol-11-1111-text-x86.iso

root@itamint:/s11x64# /usr/local/bin/qemu-system-x86_64 -L /usr/local/share/qemu/ -name guest=s11x64,debug-threads=on -machine pc,usb=off -accel kvm -cpu host,migratable=on -m 4096 -smp 2,sockets=2,cores=1,threads=1 -nodefaults -global kvm-pit.lost_tick_policy=delay -no-hpet -no-shutdown -global PIIX4_PM.disable_s3=1 -global PIIX4_PM.disable_s4=1 -boot strict=on -device ich9-usb-ehci1,id=usb,bus=pci.0,addr=0x4.0x7 -device ich9-usb-uhci1,masterbus=usb.0,firstport=0,bus=pci.0,multifunction=on,addr=0x4 -device ich9-usb-uhci2,masterbus=usb.0,firstport=2,bus=pci.0,addr=0x4.0x1 -device ich9-usb-uhci3,masterbus=usb.0,firstport=4,bus=pci.0,addr=0x4.0x2 -device VGA,id=video0,vgamem_mb=32,bus=pci.0,addr=0x2 -hda /s11x64/s11x64.qcow2 -boot d --cdrom /s11x64/sol-11-1111-text-x86.iso -device e1000,netdev=net0,mac=52:54:00:e7:4e:16,bus=pci.0,addr=0x3 -netdev vde,id=net0

- Connect to the installation with a VNC client such as MobaXterm, RealVNC or TigerVNC to complete the installation process.

- NOTE: if you choose the auto-reboot option, the VM boots from the CD again at the end of the install. Interrupt the QEMU command (Ctrl+C), then launch the VM from the OS boot disk, e.g.:

root@itamint:/s11x64# /usr/local/bin/qemu-system-x86_64 -L /usr/local/share/qemu/ -name guest=s11x64,debug-threads=on -machine pc,usb=off -accel kvm -cpu host,migratable=on -m 4096 -smp 2,sockets=2,cores=1,threads=1 -nodefaults -global kvm-pit.lost_tick_policy=delay -no-hpet -no-shutdown -global PIIX4_PM.disable_s3=1 -global PIIX4_PM.disable_s4=1 -boot strict=on -device ich9-usb-ehci1,id=usb,bus=pci.0,addr=0x4.0x7 -device ich9-usb-uhci1,masterbus=usb.0,firstport=0,bus=pci.0,multifunction=on,addr=0x4 -device ich9-usb-uhci2,masterbus=usb.0,firstport=2,bus=pci.0,addr=0x4.0x1 -device ich9-usb-uhci3,masterbus=usb.0,firstport=4,bus=pci.0,addr=0x4.0x2 -device VGA,id=video0,vgamem_mb=32,bus=pci.0,addr=0x2 -hda /s11x64/s11x64.qcow2 -device e1000,netdev=net0,mac=52:54:00:e7:4e:16,bus=pci.0,addr=0x3 -netdev vde,id=net0

- After the install, I had to create /etc/hostname.net0 manually:

echo "s11x64" > /etc/hostname.net0
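Solaris 11 normally configures interfaces with ipadm rather than /etc/hostname.* files. If the file approach does not give a persistent address, the following is a hedged sketch of the ipadm equivalent. configure_static_ipv4 and DRY_RUN are hypothetical names, the gateway is whatever you use for the VMs (this guide does not state it), and the sketch assumes the DefaultFixed network configuration profile is active (netadm enable -p ncp DefaultFixed), which manual ipadm configuration requires:

```shell
# Configure a persistent static IPv4 address on Solaris 11 with ipadm.
# Usage: configure_static_ipv4 <interface> <address/prefix> <gateway>
# Set DRY_RUN=1 to print the commands instead of running them.
configure_static_ipv4() {
    if [ "$#" -ne 3 ]; then
        echo "usage: configure_static_ipv4 <interface> <address/prefix> <gateway>" >&2
        return 1
    fi
    local ifname=$1 addr=$2 gw=$3 run=""
    if [ "${DRY_RUN:-0}" = 1 ]; then run=echo; fi
    $run ipadm create-ip "$ifname"
    $run ipadm create-addr -T static -a "$addr" "$ifname/v4"
    # -p makes the default route persistent across reboots.
    $run route -p add default "$gw"
}
```

For example, `configure_static_ipv4 net0 10.0.2.160/24 <gateway-ip>`.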

- SSH to the VM from the Ubuntu QEMU host (10.0.2.160 is what I assigned to the VM during setup):

ssh -oKexAlgorithms=+diffie-hellman-group-exchange-sha1 -o HostKeyAlgorithms=ssh-rsa itababa@10.0.2.160

- NOTE: once the VM has network access, you can add the "--daemonize" option to the boot command so QEMU detaches from the shell session on the QEMU host.

------------- End of Solaris 11 setup -------------------------------

Some useful commands:

---------------------

- 7 commands to get info about processors in Solaris:

psrinfo -pv ; isalist ; kstat -p cpu_info ; kstat -m cpu_info ; prtdiag -v ; prtpicl -v ; smbios
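The seven commands above can be run in one pass with labeled output. A minimal sketch, to be run under bash on the Solaris guest; solaris_cpu_info is a hypothetical name, and a command missing on a given release (smbios, for example, may not exist on older releases) is reported and skipped instead of failing:

```shell
# Run each processor-information command, printing a header before its output.
solaris_cpu_info() {
    local c
    for c in "psrinfo -pv" "isalist" "kstat -p cpu_info" "kstat -m cpu_info" \
             "prtdiag -v" "prtpicl -v" "smbios"; do
        echo "=== $c ==="
        # Check that the command name (the first word) exists before running it.
        if type "${c%% *}" >/dev/null 2>&1; then
            $c
        else
            echo "(not available)"
        fi
    done
}
```

For example, `solaris_cpu_info > /tmp/cpuinfo.txt` collects everything in one file.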

- List the supported OS types/variants:

root@kvm:~# virt-install --os-variant list

- Flush ARP table in Linux:

ip -s -s neigh flush all

- Stop (virsh destroy) and remove (virsh undefine) a VM created by virt-install:

root@itamint:~# virsh list --all

root@itamint:~# virsh destroy s10x64

root@itamint:~# virsh undefine s10x64

https://support.oracle.com/knowledge/Sun%20Microsystems/1444358_1.html

https://www.unix.com/unix-for-dummies-questions-and-answers/9130-removing-cde-openwindows.html

- Virtually mount a Solaris VM disk image:

modprobe nbd max_part=8

qemu-nbd --connect=/dev/nbd0 /s9x86/s9x86.qcow2

fdisk -l /dev/nbd0

mkdir /tmp/sol

mount /dev/nbd0p5 /tmp/sol

ls /tmp/sol/

- NOTE: the mountable slice may differ (e.g., p1, p5); check the fdisk output.

- To disconnect the device:

umount /tmp/sol

qemu-nbd --disconnect /dev/nbd0
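The mount steps above can be wrapped so the image is attached read-only and the nbd device is released if the mount fails. A minimal sketch, assuming the VM is shut down, /dev/nbd0 is free, and the slice holds a Solaris x86 UFS filesystem (Linux needs "-t ufs -o ufstype=sunx86" for that and its UFS write support is experimental; Solaris 11 disks use ZFS instead). mount_vm_slice, unmount_vm_slice and DRY_RUN are hypothetical names:

```shell
# Attach a VM disk image read-only through /dev/nbd0 and mount one slice.
# Usage: mount_vm_slice <image> <partition-number> <mountpoint>
# Set DRY_RUN=1 to print the commands instead of running them (as root).
mount_vm_slice() {
    if [ "$#" -ne 3 ]; then
        echo "usage: mount_vm_slice <image> <partition-number> <mountpoint>" >&2
        return 1
    fi
    local image=$1 part=$2 mnt=$3 dev=/dev/nbd0 run=""
    if [ "${DRY_RUN:-0}" = 1 ]; then run=echo; fi
    $run modprobe nbd max_part=8
    $run qemu-nbd --read-only --connect="$dev" "$image" || return 1
    $run mkdir -p "$mnt"
    # Solaris x86 UFS needs an explicit ufstype; mount read-only.
    if ! $run mount -t ufs -o ro,ufstype=sunx86 "${dev}p$part" "$mnt"; then
        # Release the nbd device if the mount fails.
        $run qemu-nbd --disconnect "$dev"
        return 1
    fi
}

# Unmount the slice and release /dev/nbd0.
unmount_vm_slice() {
    local mnt=${1:-/tmp/sol} dev=/dev/nbd0 run=""
    if [ "${DRY_RUN:-0}" = 1 ]; then run=echo; fi
    $run umount "$mnt" && $run qemu-nbd --disconnect "$dev"
}
```

For example, `mount_vm_slice /s9x86/s9x86.qcow2 5 /tmp/sol`, then `unmount_vm_slice /tmp/sol` when done.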